This blog post was written by Moritz Mähr (University of Basel / University of Bern) and Moritz Twente (University of Basel). It is part of a series on current developments in open data in the humanities and social sciences in Switzerland, produced for the infoclio.ch 2025 conference «Open Science in History». The series presents various recent projects, highlights resources available online, and offers reflections on the topic.

A hands-on workshop held on 30 April 2025 at the University of Zurich outlined why historians and other humanities scholars can, and should, take publishing back into their own hands. Organised by Dr Moritz Mähr of the DSI Digital Humanities Community and joined by Prof Michael Piotrowski, Dr Moritz Feichtinger and Dominic Weber, the event weighed the costs of today’s journal market against emerging, community-led alternatives. The discussion moved from technical fixes such as preprint servers and research-data repositories to cultural questions about labour, credit and trust in the age of AI. What follows distils the day’s argument into a roadmap for researchers, librarians and funders who want Open Access (OA) to mean more than “free-to-read PDF.”

The Case Against Legacy Publishing and the Crisis of Peer Review

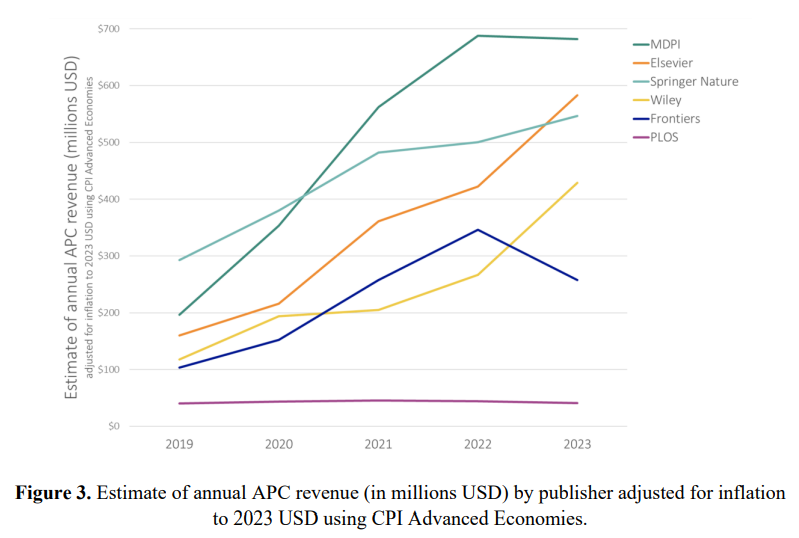

Commercial journal bundles and APC-funded “gold” OA have brought costs down for readers but not for authors, departments or libraries. Studies of scholar-led models show that they remain a small fraction of the market, overshadowed by publishers whose profit margins rival those of Big Tech. Early-career researchers face the steepest bill: time pressure, submission fees and a culture of “publish or perish” that punishes experimental work. In short, academic publishing still depends on infrastructures designed for print economics.

Michael Piotrowski’s input focused on the fundamental weaknesses of the current publishing system. Drawing on recent analyses such as Haseeb Irfanullah’s “Peer Review Has Lost Its Human Face. So, What’s Next?”, Piotrowski highlighted how commercialisation, fragmentation, and opaque review processes not only inflate costs but also undermine trust, especially as AI-generated content proliferates. He argued that current incentives—‘publish or perish’ cultures, paywalls, and centralised reputational economies—act as structural obstacles to experimental, collaborative and community-led work. For Piotrowski, the crisis is not just technical or economic but fundamentally epistemic: the humanities must reclaim editorial and evaluative authority if they wish to remain credible knowledge communities.

Peer review is widely accepted as the guardian of academic quality, yet evidence of inconsistent reviewer verdicts and of retraction rates suggests that this safeguard is shaky. A comprehensive meta-study reports that reviewers disagree markedly across disciplines. At the same time, publishers are battling an influx of AI-generated or brokered manuscripts that evade detection software and erode trust. Platforms such as PubPeer and F1000 show that open commentary can surface problems more quickly than closed reports, but incentives to share data or code remain weak.

Moritz Feichtinger expanded this diagnosis by questioning whether “open” is enough without deeper reforms. Referring to the critical essay “The Rise and Fall of Peer Review”, he drew attention to unresolved issues in open science: the fragility of reputation systems, the lack of formal credit for review work, and the persistent asymmetries in who controls or benefits from open infrastructures. Feichtinger argued for community-driven peer review models that genuinely distribute authority, reward sustained engagement, and embed review, reputation and sustainability within the technical design of publishing platforms.

Towards Community-Driven Open Access: Technical and Cultural Pathways

Workshop demonstrations stressed that publishing in the digital humanities rarely ends with prose only. Interactive maps, computational notebooks and interoperable datasets all need homes that guarantee citability and preservation.

During the session, Moritz Mähr showed how the Stadt.Geschichte.Basel project chains Hypotheses for DOI-enabled blogging with DaSCH and Zenodo for archival snapshots.

- DaSCH, Switzerland’s FAIR-compliant national humanities repository, promises long-term storage of complex objects.

- Hypotheses hosts thousands of peer-reviewed academic blogs and offers DOI minting.

- Zenodo offers DOI-minting deposits for software, data and papers, with versioning baked in.

Mähr’s technical input underlined that sustainable open publishing depends not only on free tools but on workflows that are transparent, interoperable and community-governed. By demonstrating the practical chaining of repositories and blogging platforms, he made clear how technical infrastructure, persistent identifiers, and community curation must co-evolve—allowing researchers to maintain agency over their work while building trust in the wider scholarly record.

Several participants pointed to preprint servers such as arXiv.org and philpapers.org, which are widely adopted in certain disciplines. Humanities projects could adopt the same architecture, adding fields for TEI-XML editions or IIIF image manifests. The idea is not to replace journals overnight but to create a feedback layer: a sandbox where ideas receive community review long before journal submission.
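To make the idea of humanities-specific fields more concrete, here is a minimal sketch of what such a preprint record might look like. It is not the schema of any existing server: the class name `PreprintRecord`, its field names and the `example.org` URLs are all assumptions made for illustration.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class PreprintRecord:
    """Hypothetical metadata record for a humanities preprint.

    All field names are illustrative assumptions, not an existing schema.
    """
    title: str
    authors: List[str]
    doi: Optional[str] = None              # persistent identifier, once minted
    tei_edition_url: Optional[str] = None  # link to a TEI-XML edition, if any
    iiif_manifest_urls: List[str] = field(default_factory=list)  # IIIF manifests

    def missing_fields(self) -> List[str]:
        """Return the names of core fields that are still empty."""
        missing = []
        if not self.title.strip():
            missing.append("title")
        if not self.authors:
            missing.append("authors")
        if self.doi is None:
            missing.append("doi")
        return missing


# Placeholder values only; the URLs do not point to real resources.
record = PreprintRecord(
    title="Example preprint",
    authors=["A. Historian"],
    tei_edition_url="https://example.org/edition.xml",
    iiif_manifest_urls=["https://example.org/iiif/manifest.json"],
)

# A plain dictionary is easy to serialise as JSON for a repository or API.
record_as_dict = asdict(record)
```

In this sketch the humanities-specific fields are optional, so a text-only preprint and a richly illustrated edition can share one record format. A check such as `missing_fields()` could flag a draft that has not yet received a DOI.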

Workshop participant Thomas Leibundgut (Co-Coordinator, Open Science, swissuniversities) highlighted that while Switzerland leads in preprint adoption in many fields, uptake in the humanities remains low. Drawing on current studies and surveys, he stressed that integrating preprints into journal workflows and providing formal recognition are the most promising strategies to increase adoption, with mandates and training also playing a role. Leibundgut’s reflections underscored the workshop’s consensus: humanities preprinting requires tailored, community-driven solutions.

“Open” is too often equated with “free labour.” Community-driven OA must confront who pays for server maintenance, metadata curation and the emotional work of constructive review. Scholars at the workshop echoed findings that editorial and technical work deserves formal credit lines, badges or even micro-CV entries that hiring committees can recognise. Without such signals, openness risks reproducing the same inequalities it set out to cure.

Platforms such as Programming Historian or Zeitschrift für digitale Geisteswissenschaften (ZfdG) show that open, comment-rich peer review is viable—and can even shorten turnaround times when reviewers know their assessments will be read by colleagues. Ideas floated in Zurich ranged from GitHub-style pull requests to Stack Overflow-like up-votes, where datasets, code and prose are evaluated by distinct reviewer pools. Transparency not only boosts accountability; it also teaches early-career scholars how to review by example.

Diamond OA journals, which are financed not by APCs but by public funds whose distribution is delegated to the scientific community, illustrate how technical capacity, governance and legal frameworks must co-evolve. Participants argued for a Swiss coalition of universities, libraries and learned societies to underwrite a humanities-wide repository cluster: preprints, datasets, software containers and overlay journals stitched together by persistent identifiers and open-source code. Such a federation would spread costs, pool expertise and allow each discipline to customise editorial workflows without reinventing the wheel.

The Future: Publishing as Iterative, Collaborative Scholarship

Digital scholarship rarely finishes at the “version of record.” Versioning, post-publication commentary and living datasets are already standard in software development; extending them to historiography foregrounds scholarship as a process, not a product. When iterative publishing is normalised, citation metrics lose some of their gatekeeping power, making room for peer recognition based on the usefulness, not just the novelty, of a contribution. Review becomes mentorship; publishing becomes collaboration.

The Zurich workshop closed on a pragmatic note. Start small: deposit data in Zenodo, invite open comments on Hypotheses, and volunteer as a reviewer on a community platform. Lobby departments and funders to value these acts in hiring and promotion. If enough historians and humanities scholars treat openness not as volunteerism but as mutual investment, Switzerland could host a humanities preprint ecosystem by the end of the decade.

For now, the question is not whether we can afford to experiment with community-driven publishing, but whether we can afford not to. The reputational stakes of research integrity, the financial stakes of subscription inflation and the epistemic stakes of AI-generated noise all point in the same direction: scholars must take charge of how their work circulates—or risk losing the conversation altogether.